Madison Leonard is an author of this article.

Far from mere tools, employee surveys and interviews serve as key indicators of an organization’s overall health and success. They play a pivotal role in assessing corporate culture, gauging satisfaction, gathering feedback, improving retention, and measuring diversity. Surveys can quantify sentiment, often over multiple periods and population segments. Interviews supplement surveys by offering nuance, context, the opportunity to follow up, and even multiple perspectives on a single topic. These qualitative responses are essential for identifying subtle concerns, uncovering issues not explicitly covered by standard questions, and understanding employee sentiment. Without these insights, assessments risk missing critical information about how employees feel about governance, compliance, and other key topics.

Despite their importance, surveys and interviews come with their own set of challenges. They are resource-intensive and susceptible to human error. The process of choosing survey topics and questions is a delicate one. While quantitative survey results are clear-cut, the qualitative feedback from interviews and focus groups, often in the form of lengthy, nuanced responses, demands significant mental effort to categorize and interpret. The larger the dataset, the higher the risk of confirmation bias, where reviewers may focus on responses that align with their expectations or miss critical patterns in the data. This bias, coupled with the sheer volume of information to be processed, makes it difficult to conduct thorough and objective assessments, especially under time and budget constraints.

Artificial Intelligence (AI), specifically Large Language Models (LLMs), presents a compelling solution to these challenges. By automating both quantitative and qualitative data analysis, AI models can swiftly sift through large datasets, identifying patterns, contradictions, and sentiment across thousands of responses in a fraction of the time it would take a human reviewer. This time-saving aspect of AI is particularly beneficial in today’s fast-paced business environment, where efficiency and productivity are paramount.

In addition to speed, AI helps mitigate the risk of human error and bias. It allows professionals to review qualitative data thoroughly and impartially. Unlike the human eye, a well-trained AI model can efficiently process voluminous and complex text, uncovering insights without overlooking critical information.

But, as powerful as AI may be, human judgment and experience remain critical. Human input is necessary to refine, train and ultimately verify the accuracy of an AI model.

The Case Study

This article uses an employee survey case study to describe how AI streamlined the process, reduced the workload, and provided deeper, more objective insights. Specifically, we discuss how the team applied AI to identify critical themes from interview transcripts, which informed the survey topics and questions, and analyzed employee survey results and follow-up focus groups.

Preliminary Interviews

The process began with interviews of key personnel before creating the survey. Our AI model extracted key themes from interview transcripts, which informed the topics and helped develop survey questions.

The Survey

The team developed and deployed a survey to over 300 employees, asking questions about the themes that our AI model and analysts identified. The survey had a 90% completion rate and generated a dataset with over 300 respondent results.

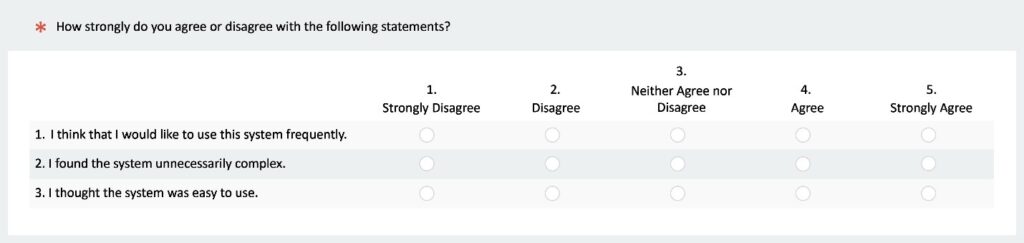

The survey posed sixty-six “Likert scale” questions. These questions asked employees to rate their agreement or disagreement with a statement on a scale, producing quantitative results. See below for an example:

The responses to these questions generated a dataset of quantitative feedback with data points relating to employee agreement or disagreement. Our AI model quickly identified and analyzed the number of employees who generally agreed or disagreed with each question and the strength of that sentiment (e.g., partially vs. strongly).
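To make the Likert tally concrete, the sketch below counts agreement and disagreement for a single question and averages the strength of the responses. It is only an illustration: the five scale labels, their numeric scores, and the function name `summarize_question` are assumptions, since the article does not state the exact scale wording or how the model computed its counts.

```python
from collections import Counter

# Hypothetical five-point scale; the survey's actual labels are not given.
SCALE = {
    "strongly disagree": -2,
    "partially disagree": -1,
    "neutral": 0,
    "partially agree": 1,
    "strongly agree": 2,
}


def summarize_question(responses):
    """Count agreement and disagreement for one question and average the strength."""
    scores = [SCALE[r.strip().lower()] for r in responses]
    counts = Counter(
        "agree" if s > 0 else "disagree" if s < 0 else "neutral" for s in scores
    )
    return {
        "agree": counts["agree"],
        "disagree": counts["disagree"],
        "neutral": counts["neutral"],
        # Positive mean leans toward agreement, negative toward disagreement.
        "mean_score": sum(scores) / len(scores) if scores else 0.0,
    }


sample = ["Strongly agree", "Partially agree", "Neutral", "Partially disagree"]
summary = summarize_question(sample)
print(summary)
```

Keeping the direction (agree or disagree) separate from the mean score preserves the distinction the paragraph draws between how many employees agreed and how strongly they did so.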

The survey concluded with two open-ended questions. One question aimed to understand employee perceptions of themes covered by the Likert scale questions. The other asked employees about their perceptions of themes not covered by the Likert scale questions to gather general feedback and context relevant to the review not otherwise covered.

AI Analysis of Survey Results

Our AI model compared the quantitative Likert scale results and open-ended, qualitative answers. The AI model used the Likert scale responses to determine the general sentiment of employees, whereas the qualitative feedback from the open-ended questions allowed for a more nuanced analysis.

Our AI model functioned like a chatbot, allowing the client to ask questions answered in a narrative.[1] For example, the model read through open-ended responses and created a table counting the number of mentions per governance theme and summarizing suggestions for improvement per governance theme.
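A table of mentions per theme can be approximated with simple keyword matching, as in the sketch below. This is a stand-in for illustration, not the LLM-based analysis described above: the theme names, keyword lists, and sample responses are all hypothetical, and summarizing suggestions for improvement is left out because it would require a language model.

```python
from collections import Counter

# Hypothetical themes and keywords, chosen only for illustration.
THEME_KEYWORDS = {
    "compliance process": ["compliance process"],
    "training": ["training"],
    "reporting": ["reporting", "hotline"],
}


def count_theme_mentions(responses):
    """Count how many responses mention each theme at least once."""
    counts = Counter({theme: 0 for theme in THEME_KEYWORDS})
    for text in responses:
        lowered = text.lower()
        for theme, keywords in THEME_KEYWORDS.items():
            # Substring matching also catches plurals such as "compliance processes".
            if any(keyword in lowered for keyword in keywords):
                counts[theme] += 1
    return counts


responses = [
    "Compliance processes are too complex.",
    "More training on the hotline would help.",
    "Training was useful.",
]
counts = count_theme_mentions(responses)
for theme, n in counts.most_common():
    print(f"{theme:<20} {n}")
```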

Our AI model also compared the qualitative and quantitative feedback. In less than one hour, the model ingested over 300 employee responses and performed an analysis to identify where the qualitative feedback was not consistent with quantitative feedback. The AI model employed a three-step approach to make the comparison:

- Identify themes mentioned in the open-ended response.

- Determine the sentiment of the open-ended response by instructing the model to review the natural language response for keywords traditionally associated with positive or negative sentiment and provide specific quotations as support.

- Compare this sentiment to the sentiment the Likert scale responses reflected.
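The three steps above can be sketched in a few lines of Python. This is a deliberately simplified, keyword-based stand-in and not the LLM the team used: the theme list, the positive and negative keyword sets, the Likert labels, and every function name are assumptions made for illustration. The sample response borrows the wording described in footnote 2.

```python
import re

# All keyword lists and labels below are illustrative assumptions.
THEMES = {"compliance process": ["compliance process"]}
NEGATIVE = {"complex", "inefficient", "confusing"}
POSITIVE = {"clear", "efficient", "helpful"}
AGREE = {"agree", "strongly agree"}
DISAGREE = {"disagree", "strongly disagree"}


def find_themes(text):
    """Step 1: list the themes mentioned in an open-ended response."""
    lowered = text.lower()
    return [t for t, kws in THEMES.items() if any(k in lowered for k in kws)]


def text_sentiment(text):
    """Step 2: label sentiment from keywords and return supporting sentences."""
    neg_quotes, pos_quotes = [], []
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        # Whole-word tokens, so "inefficient" does not count as "efficient".
        words = set(re.findall(r"[a-z]+", sentence.lower()))
        if words & NEGATIVE:
            neg_quotes.append(sentence)
        if words & POSITIVE:
            pos_quotes.append(sentence)
    if len(neg_quotes) > len(pos_quotes):
        return "negative", neg_quotes
    if len(pos_quotes) > len(neg_quotes):
        return "positive", pos_quotes
    return "neutral", []


def likert_sentiment(answers):
    """Label a set of Likert answers by whether agreement outnumbers disagreement."""
    agree = sum(a.lower() in AGREE for a in answers)
    disagree = sum(a.lower() in DISAGREE for a in answers)
    if agree > disagree:
        return "positive"
    if disagree > agree:
        return "negative"
    return "neutral"


def find_contradictions(open_text, likert_by_theme):
    """Step 3: flag themes where text and Likert sentiment point in opposite directions."""
    label, quotes = text_sentiment(open_text)
    flags = []
    for theme in find_themes(open_text):
        scale_label = likert_sentiment(likert_by_theme.get(theme, []))
        if {label, scale_label} == {"positive", "negative"}:
            flags.append(
                {"theme": theme, "text": label, "likert": scale_label, "quotes": quotes}
            )
    return flags


response = "The compliance process is complex and inefficient. It should be centralized."
likert = {"compliance process": ["Agree", "Agree", "Agree"]}
flags = find_contradictions(response, likert)
print(flags)
```

Returning the supporting sentences alongside each label mirrors the requirement that the model provide specific quotations, which gives a human reviewer something concrete to check.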

For example, here is how our AI model identified that a respondent had contradicting quantitative and qualitative feedback regarding an issue relating to compliance.

- The model identified “compliance process” in the respondent’s open-ended responses.

- Next, the model summarized the open-ended responses and determined that the respondent had negative sentiments toward the firm’s compliance processes.[2]

- The model came to an opposite conclusion when it analyzed responses to Likert scale questions.

- The model determined that the Likert scale responses indicated a positive sentiment towards the firm’s compliance processes.

- The output supported this conclusion with three Likert scale responses where the employee “agreed” that the firm’s compliance processes were adequate.[3]

Our AI model revealed that while quantitative feedback initially suggested positive sentiment, qualitative responses pointed to negativity, challenging the initial analysis. This nuanced conclusion would not have been made without our AI model’s review of the qualitative data. Human interpretation was essential in grasping the significance of this discrepancy.

Observations

Save Time & Mitigate Bias

AI is not only about speed. Humans are subject to confirmation bias, the human brain has limitations, and reviews of this volume are resource-intensive.

Bias can be illustrated through an example: suppose you have six surveys to review. You can summarize the quantitative feedback relatively quickly, but analyzing the natural language responses requires more brain power. Each respondent mentions different compliance processes, and you have difficulty discerning positive or negative sentiments.

Now, imagine this review is multiplied by 50, and you have 300 surveys to analyze. Because of time and cost constraints, reviewers must create individualized logic to optimize their review, especially considering the time required to analyze 300 natural language responses. Instead of performing a detailed analysis of every response, a reviewer may focus on responses containing specific buzzwords. Therefore, qualitative reviews are innately biased because an individual’s unique experiences help drive a particular logic to guide decision-making.[4]

It is challenging to tune out our innate human biases when reviewing survey results for large populations of individuals. AI provides a solution where we can complete a comprehensive review at a large scale without subjecting ourselves to confirmation bias. In this sense, we can feel confident in the objective nature of our insights and achieve those insights in a large-scale, cost- and time-efficient manner.

But Humans Remain Critical

Although AI operates more efficiently than the human brain, human judgment and experience remain critical. We arrived at our model through an iterative and collaborative process. Throughout the project, we tailored the model for the specific needs of the review through a continued dialogue between our data analytics and compliance professionals.

- Our compliance experts identified essential compliance themes based on their experience as a precursor to building the model. These themes functioned as a starting point for our data analytics professionals, who constructed the model.

- Once the model was constructed, our compliance experts created test questions to generate output from the model.

- Feedback from our compliance experts regarding the output was essential to refining and training our model. The compliance experts reviewed the output to identify areas for improvement, and the data analytics team adjusted the model based on this feedback.

Separately, it is essential to lean on experts to verify the accuracy of an AI model. Compliance professionals are accustomed to diligence, which is especially important when implementing new technologies. In a now-famous case, a New York lawyer leveraged ChatGPT to perform legal research that yielded cases and quotations that did not exist, a phenomenon called AI “hallucination.”[5]

This example underscores how paramount diligence is concerning AI, and our case study is no exception. In this case study, we implemented a type of AI model known as an LLM, which, despite its advantages, is not perfect. As such, we required the AI model to provide specific quotations as support. This allowed us to verify the accuracy of the output generated by our model. Our experts reviewed the underlying survey responses for each output set to confirm that quotations were pulled out accurately and remediated inaccuracies.
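Part of this quotation check can be automated before the expert review. The sketch below flags any quotation that does not appear verbatim in the underlying response, ignoring case and whitespace differences. The function name `unsupported_quotes` and the sample data are hypothetical, and a check like this would complement the human review described above rather than replace it.

```python
def unsupported_quotes(quotes, source_text):
    """Return the quotes that do not appear verbatim in the source response."""
    # Collapse whitespace and lowercase so formatting differences are ignored.
    normalized_source = " ".join(source_text.split()).lower()
    return [
        quote
        for quote in quotes
        if " ".join(quote.split()).lower() not in normalized_source
    ]


source = "The compliance process is complex and inefficient."
model_quotes = ["complex and inefficient", "slow and outdated"]
missing = unsupported_quotes(model_quotes, source)
print(missing)
```

A quotation that passes this check still needs a reader to confirm it supports the conclusion drawn from it; the check only catches text that was never in the response.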

In a simple example, our compliance experts identified instances where the model interpreted any mention of “agree” as a positive sentiment. However, this included “disagree” because the string “agree” appears within that word; our team subsequently adjusted the criteria to exclude such matches. This example illustrates how humans will continue to play a critical role in implementing AI and in relying upon AI-driven conclusions.
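The pitfall is easy to reproduce. The sketch below contrasts a plain substring test with a whole-word match using a regular expression word boundary. Both function names are hypothetical, and the article does not say how the team actually adjusted its criteria, so this shows only one possible fix.

```python
import re


def naive_is_positive(answer):
    """Substring test: wrongly treats 'disagree' as containing agreement."""
    return "agree" in answer.lower()


def whole_word_is_positive(answer):
    """Whole-word test: matches 'agree' only when it stands alone as a word."""
    return re.search(r"\bagree\b", answer.lower()) is not None


for answer in ["Strongly agree", "Disagree", "Strongly disagree"]:
    print(answer, naive_is_positive(answer), whole_word_is_positive(answer))
```

A whole-word match still misreads negated phrasing such as "do not agree", which is one reason expert review of the output remains necessary.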

The expertise of compliance professionals is more valuable still when combined with that of data analytics professionals. Collaboration between our data analytics and compliance teams enabled us to implement AI for more complex use cases. In our case, the data analyst was well-versed in LLMs and helped our compliance experts grasp the capabilities and limitations of an LLM, which stretched far beyond what they initially understood. This supports the conclusion that both groups of professionals must collaborate to create an advanced model that is useful in a particular context.

Conclusion

AI accelerates the processing of both quantitative and qualitative employee feedback. By swiftly identifying patterns and inconsistencies, AI helps organizations analyze large datasets more efficiently, saving time and mitigating human error or bias.

While AI is highly effective in analyzing data, human expertise remains crucial in fine-tuning AI models and interpreting nuanced insights. Collaboration between compliance professionals and data analysts ensures that AI tools are aligned with organizational needs and produce reliable results.

If you have any questions or would like to find out more about this topic, please reach out to Michael Costa or Jonny Frank.


[1] Our model differs from popular chatbots like ChatGPT in its enterprise-grade security and the confidentiality it provides for sensitive client data. Furthermore, our model is an LLM that performs complex analyses of natural language on a large scale, which cannot be performed by popular chatbots.

[2] The model concluded that the respondent had a negative sentiment towards compliance processes because the respondent described the compliance processes as “complex” and “inefficient” and suggested that the firm centralize its compliance processes.

[3] The model determined that the Likert scale responses indicated a positive sentiment towards the firm’s compliance processes based on three Likert scale responses where the respondent “agreed” that the firm’s compliance processes were adequate.

[4] See M. Bazerman & A. Tenbrunsel, Ethical Breakdowns, Harvard Business Review (2011) https://hbr.org/2011/04/ethical-breakdowns (discussing how cognitive biases distort ethical decision making; the same cognitive biases may also distort our analysis); see also M. Costa, Bias in Compliance: How to Identify and Address It, VentureBeat (2022) https://venturebeat.com/datadecisionmakers/bias-in-compliance-how-to-identify-and-address-it/ (StoneTurn data analytics partner Michael Costa discusses the dangers of confirmation bias in compliance).

[5] L. Moran, Lawyer Cites Fake Cases Generated by ChatGPT in Legal Brief, LegalDive (2023) https://www.legaldive.com/news/chatgpt-fake-legal-cases-generative-ai-hallucinations/651557/.